During Google Health’s Check Up event last year, we introduced Med-PaLM 2, a large language model (LLM) tuned for healthcare. Since then, we’ve made this research available to organizations around the world that are building solutions for tasks such as improving nurse handoffs and helping clinicians with documentation. Toward the end of last year, we unveiled MedLM, a family of foundation models for healthcare built on Med-PaLM 2. MedLM is now more widely available through Google Cloud’s Vertex AI platform.

Generative AI for healthcare has continued to advance since then, from new approaches to training health AI models to novel applications of AI across the healthcare sector.

Expanding Healthcare Models to New Modalities

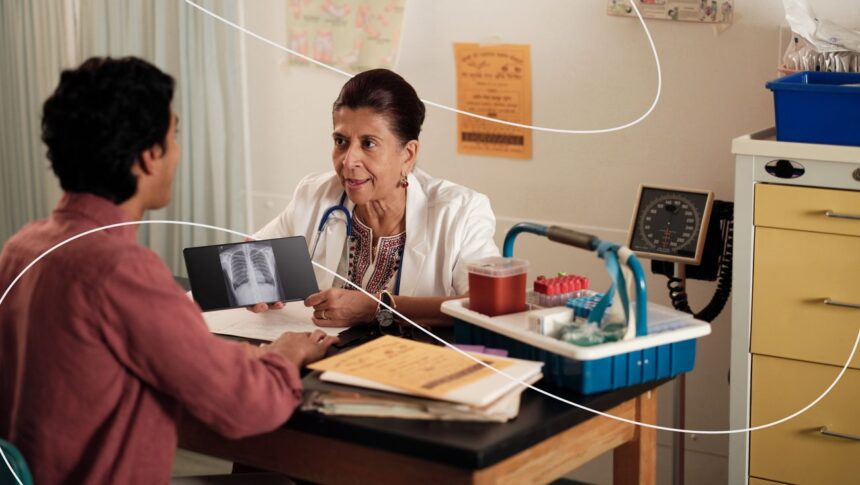

Medicine draws on many kinds of information stored in many different formats, such as radiology images, lab results, genomics data, and environmental context. Understanding a person’s health as a whole requires technology that can interpret this full range of information.

We are adding new capabilities to our models so that generative AI can be more useful to healthcare organizations and to individuals. We recently introduced MedLM for Chest X-ray, which has the potential to transform radiology workflows by helping classify chest X-rays for a variety of use cases. We are starting with chest X-rays because they are critical for detecting lung and heart conditions. MedLM for Chest X-ray is currently available to select testers as an experimental preview on Google Cloud.

Research on Fine-Tuning Models for the Medical Domain

The healthcare industry generates roughly 30% of the world’s data, and that volume is growing by 36% a year. This data spans large amounts of text, images, audio, and video, and vital patient information is often buried deep in medical records, which makes it hard to find quickly.

That is why we are investigating how a version of Gemini fine-tuned for the medical domain can unlock new capabilities in advanced reasoning, contextual understanding, and processing multiple data types. Recent research has shown exceptional performance on benchmarks, including 91.1% accuracy on U.S. Medical Licensing Exam (USMLE)-style questions and strong results on MedVidQA, a medical video question-answering dataset.

Building on the multimodal capabilities of Gemini, we have applied these fine-tuned models to other clinical benchmarks, including interpreting chest X-ray images and genomics data. We have also seen promising results in report generation for both 2D images, such as X-rays, and 3D images, such as brain CT scans, a significant step forward for medical AI. This research is still ongoing, but it suggests that generative AI in radiology could give healthcare institutions useful assistive tools.

Personalized Health AI Model for Tailored Coaching and Recommendations

Fitbit and Google Research have collaborated to develop a Personal Health Large Language Model that aims to power more personalized health and wellness features in the Fitbit mobile app. The model is designed to provide tailored coaching, such as actionable messages and advice, that adapts to each person’s health and fitness goals. For example, the model can analyze variations in a user’s sleep patterns and sleep quality, then recommend how to adjust the intensity of their workouts.