The familiar noise your Pixel emits when you snap a photo? Surprisingly, it’s not a camera shutter sound. Conor O’Sullivan, the head of Pixel’s sound design team, reveals that it’s actually the sound of scissors opening and closing. “It’s not a direct recording of that action, but that’s the foundation of the sound you hear today,” he explains.

When shaping the auditory experience of your Pixel, Conor and his team don’t just aim to grab your attention; they seek to convey a message through sound. Conor describes sound design as the art of creating deliberate sounds with context.

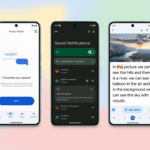

Explore how the Pixel sound design team finds inspiration for the sounds your Pixel produces, then develops, tests, and integrates them.

The importance of sound design

Sound design for devices is becoming increasingly important because we already rely so heavily on our sense of sight. “With so much information available on a smartphone screen, we don’t want to overwhelm any one sense,” Conor points out.

Sound can convey additional information – for example, Pixel uses distinct sounds to differentiate between a text message and a call, or between sending and receiving an email. The sound of an AMBER alert on your phone likely evokes a different response than a notification about an incoming email does. “Sound can convey emotion effectively,” Conor explains. “It can express positivity, negativity, or a sense of urgency.”

Designers develop several types of sounds for Pixel, including gesture feedback sounds that respond when you interact with user interface elements, semantic feedback sounds that confirm selections and actions, and attention sounds that Pixel generates on its own, like alarms. The team considers both form and function during the design process. Form is the aesthetic side of a sound – how it evokes emotion and whether it feels playful or human-like. Function is the sound’s purpose – such as waking someone up or indicating that a timer is complete – and how effectively the sound fulfills that purpose.

Sound designer Harrison Zafrin contributed to the sounds for Guided Frame, a Pixel camera tool that helps people who are blind or have low vision take selfies. The tool balances form and function by combining haptics (tactile feedback) with sound to guide users as they position their hand and the camera. Harrison explains, “We developed a system with five zones around the viewfinder where you hear a progressively positive musical sequence as you move towards the center, culminating in a celebratory sound when you’re centered. We aimed to make the sound and haptics both delightful and distinctly Google-like while effectively conveying the necessary information to help users capture the perfect shot.”