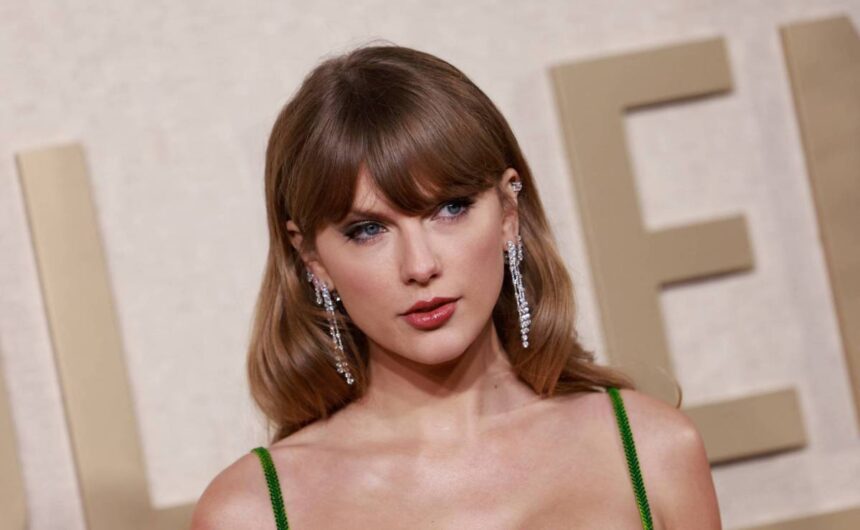

Recently, explicit, nonconsensual deepfake images of Taylor Swift circulated on X, raising concern among fans. One post garnered more than 45 million views, 24,000 reposts, and hundreds of thousands of likes before it was removed.

Taylor Swift has a massive and dedicated fanbase, known as Swifties. These fans are now taking swift and decisive action in response.

Large fandoms, like the Swifties and K-pop fans, have shown their collective power in the past. For instance, K-pop fans reserved hundreds of tickets to a Donald Trump rally to reduce the attendance numbers. Some observers have even speculated about the potential influence of Swifties as a voting bloc in the upcoming U.S. presidential election.

However, the Swifties' current focus is on containing and burying the nonconsensual deepfake images of the singer. They are flooding X with thousands of posts so that the AI-generated content is pushed down in results for searches like “taylor swift ai” or “taylor swift deepfake.” The hashtag “PROTECT TAYLOR SWIFT” has gained traction, with more than 36,000 posts on X.

While some fans are urging others to identify the users sharing the deepfakes, others caution against answering harassment with more harassment. With so many people taking part in the campaign, not all Swifties share the same approach, and some may end up targeting the wrong accounts.

The proliferation of generative AI tools has made deepfake harassment widespread, and law enforcement agencies have warned about the threat of deepfake-enabled sextortion. A 2019 report from cybersecurity firm Deeptrace found that about 96% of deepfake videos online were pornographic, and that these overwhelmingly targeted women.

This abuse has extended to schools, where underage girls have been targeted with nonconsensual deepfakes made by their peers. Consequently, protecting Swift is not just about defending a celebrity but about setting a precedent that such behavior is unacceptable.

One TikTok user, LeAnn, emphasized the broader significance, stating in a video, “In protecting her, you’re going to be protecting yourself, and your daughters.”

According to 404 Media, the deepfake images originated in a Telegram group dedicated to creating nonconsensual explicit content with generative AI tools, including Microsoft Designer. Although Microsoft's policies prohibit such content, the group was still able to produce it with the company's tool, raising concerns about the effectiveness of existing safety measures.

Neither Microsoft nor X responded to requests for comment before publication.

Lawmakers are also moving to criminalize nonconsensual deepfakes. At the state level, Virginia has banned deepfake revenge porn, and in Congress, Representative Yvette Clarke (D-NY) has reintroduced the DEEPFAKES Accountability Act, which aims to establish legal protections against abuse of deepfake technology.

The issues surrounding nonconsensual deepfake content highlight the risks posed by the rapid advancement of AI technology. There is a growing need to regulate the development and deployment of these technologies to prevent their misuse.